Running AI Locally With Llama.cpp

From Nothing to Running Local AI and Serving Over the Network to VS Code and AnythingLLM

If you need even more performance out of your local AI, it’s time to move to a more powerful backend for running models. More tokens per second means faster answers and quicker iteration, so we can develop our knowledge, code, agents, or whatever else in less time. I’ve strongly recommended Ollama to everyone here because it has the simplest setup for beginners starting from nothing, it runs easily on Windows, Linux, and Mac, and you don’t have to perfectly optimize it for your system. For our next evolution, though, we are going to use Llama.cpp to further improve performance and gain access to more models on HuggingFace.

Llama.cpp is a project that began as a faster backend for Facebook’s Llama-based models, written from the ground up in C++. It has many configuration and build options to suit a variety of hardware and generally performs inference faster, up to 1.8 times the performance compared with Ollama. Additionally, if you are on a Mac, Llama.cpp treats Apple Silicon as a first-class target and is optimized through Apple’s Metal framework, so it is an ideal choice there as well. While not as simple as Ollama, it offers access to a lot more models than Ollama does, thanks to the HuggingFace community.

Models and Quantization

When new models are published on HuggingFace, they are typically published as full-precision safetensors files, which for the largest models can total 300GB or more, and recent releases like DeepSeek R1 reached up to 600GB! These are not easily usable on average consumer hardware because of their size and the cost of running them, so members of the community convert them to formats like GGUF. Converting to GGUF lets consumer backends such as Ollama and llama.cpp load the model. In addition to changing the format, they also quantize the model, meaning they strip away some of the precision of the weights to shrink the file. We sacrifice some precision, but gain the ability to run the model on less powerful hardware with less RAM. Since most consumers don’t have server-grade GPU clusters, we typically have to use these quantized models to run on our GPUs or Macs.
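To get a feel for why quantization matters so much for file size, here is a rough back-of-the-envelope sketch. It assumes the size of a model is dominated by its weights, so size is roughly the parameter count times the bits per weight. The 7-billion-parameter model is a made-up example, and the function name is just for illustration.

```python
def estimate_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n_params = 7e9  # hypothetical 7-billion-parameter model
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label}: ~{estimate_size_gb(n_params, bits):.1f} GB")
```

Real quantized files also store scaling data and metadata alongside the weights, so actual sizes run somewhat larger than this estimate.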

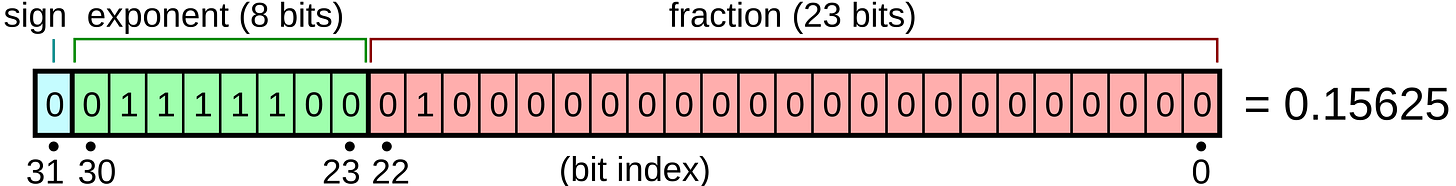

Think of this in terms of music file formats. Music in .WAV is fully uncompressed audio, meaning the highest quality, but that also means massive file sizes. This is good for audio engineers making music on high-end equipment, but not for the average listener who might just use a Bluetooth speaker. Even a conversion to FLAC saves an immense amount of disk space through compression without sacrificing audio quality. We could keep compressing the audio down to .mp3 and most people might not notice the difference, while saving GBs of space on their mobile device. Quantization is similar, except that each step of quantization is far more noticeable than a drop in audio quality is to the human ear. Some models spit out gibberish when heavily quantized, because too much of the precision needed to answer correctly has been removed. The tensors or “weights” of a model are essentially just massive matrices, with each cell containing an FP32 number as shown below.
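To make "precision per cell" a bit more concrete, here is a minimal sketch using only Python’s standard library. It stores one made-up weight value as a 32-bit float and as a 16-bit float and reads it back, so you can see how much of the number survives. The helper names are my own and not part of any AI library.

```python
import struct


def roundtrip(value: float, fmt: str) -> float:
    """Store value in the given binary float format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def to_fp32(value: float) -> float:
    return roundtrip(value, "<f")  # 4 bytes per number


def to_fp16(value: float) -> float:
    return roundtrip(value, "<e")  # 2 bytes per number


if __name__ == "__main__":
    weight = 0.1234567  # made-up weight value
    for name, convert in [("FP32", to_fp32), ("FP16", to_fp16)]:
        stored = convert(weight)
        print(f"{name}: {stored!r} (error {abs(stored - weight):.2e})")
```

Halving the bytes per number halves the storage, and the price is a larger rounding error on every single weight.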

As we quantize down, we start lopping off the precision of these numbers. When we perform inference on an LLM after sending it our text in chat, it essentially chains together the most likely answer, using matrix multiplications over the weights to figure out the “correct” response. So less precise weights = less precise answers, generally speaking. But a good quantization can still give excellent results for the average consumer, which is why so much effort goes into it and why it enables local AI. A less precise model, even running locally, can still do whatever you need it to. As a caveat, there are limits to how far you can quantize models of different sizes, as you can see in the first graph in this post. Basically, the fewer parameters a model has, the less useful it is to quantize it beyond a certain point. This is essentially diminishing returns: the tradeoff between the model’s trained parameters and the loss of precision.
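Here is a toy sketch of that idea. It uses a simple symmetric "absmax" scheme, which is my own simplification for illustration and not the actual quantization format used in GGUF files. The weights and inputs are made-up numbers. It quantizes a small weight vector to a given bit width, converts it back, and compares the dot product against the full-precision result.

```python
def quantize(weights, bits):
    """Symmetric absmax quantization: map floats to signed integers of the given width."""
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed range")
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits, 7 for 4 bits
    scale = max(abs(w) for w in weights) / qmax
    if scale == 0:  # all-zero weights: nothing to scale
        return [0 for _ in weights], 0.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Turn the stored integers back into approximate floats."""
    return [q * scale for q in quantized]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    weights = [0.42, -1.3, 0.07, 0.88, -0.05, 0.61]  # made-up weights
    inputs = [1.0, 0.5, -2.0, 0.25, 3.0, -1.0]       # made-up activations
    exact = dot(weights, inputs)
    for bits in (8, 4, 2):
        quantized, scale = quantize(weights, bits)
        approx = dot(dequantize(quantized, scale), inputs)
        print(f"{bits}-bit: output {approx:.4f} vs exact {exact:.4f}")
```

With fewer bits, every weight has fewer integer levels to land on, so the rounding error per weight can be larger, and that error flows straight into the output of the multiplication.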

As for Ollama and Llama.cpp, both use the GGUF model format with their backends. Llama.cpp can use GGUF files directly, while Ollama adds another step: someone has to write a Modelfile to go with the GGUF and then upload the result specifically to Ollama’s site, which is extra effort. The Modelfile is modeled after Dockerfiles, and you can see the layers being pulled as you download a model, so it’s essentially a layered file system combined with the GGUF. The Modelfile encodes things like the default chat template, signatures, config parameters, and other settings, so you’re somewhat at the mercy of whoever writes it, though I believe most things can be overridden at runtime or reconfigured if needed. One key note: the Modelfile is one way to set the context size of your model, via num_ctx. Whoever writes the Modelfile can set this parameter, so if you need a large context, you must raise it yourself. Since there’s a default context of 2048, you might easily hit that limit by accident and not know!
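As a rough illustration of what that looks like, here is a small sketch that writes a minimal Modelfile pointing at a local GGUF and raising num_ctx. The GGUF file name and the context size of 8192 are placeholders I made up, and a real Modelfile will usually carry more than these two lines (a chat template, for example).

```python
from pathlib import Path


def render_modelfile(gguf_path: str, num_ctx: int) -> str:
    """Return Modelfile text that points at a local GGUF and sets the context size."""
    return f"FROM {gguf_path}\nPARAMETER num_ctx {num_ctx}\n"


if __name__ == "__main__":
    # Hypothetical file name and context size, for illustration only.
    text = render_modelfile("./my-model.Q4_K_M.gguf", 8192)
    Path("Modelfile").write_text(text)
    print(text)
```

From there, something like `ollama create my-model -f Modelfile` should register the model under that name with the larger context.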

This video below explains more of the modelfile process with Ollama.

Since Ollama’s community is smaller than HuggingFace’s, it will often take longer for a new model of your choice to become available. The difference might be only a few days, but if that matters to you, I would suggest using Llama.cpp more. Add in quantization and it can be hard to get more specific models and their quants in Ollama without getting your hands dirty. Backends like Llama.cpp will often have first dibs on newer models, such as the recent DeepSeek R1, thanks to the massive amount of traffic HF gets and the large number of users who are happy to quantize and upload models for us. HF also provides tools to do the quantization for you, making the process fast once a new model drops. HuggingFace also hosts many more model types and varieties than Ollama typically would. Add in the performance gains, at the cost of some simplicity, and you have a superior backend for hosting models locally in a more performant manner. If you want the best out of your local models, I highly suggest switching to a more performant backend such as Llama.cpp (or vLLM or ExLlamaV2, but that’s another post one day). Now that the differences between the two have been explained, let’s go over setting up Llama.cpp.

Running Llama.cpp From Scratch

For this example we will be compiling Llama.cpp from scratch, as a way to always get the latest features but, more importantly, to understand how and why the software is built. Most of the time when we use a CLI tool, we don’t necessarily care about the underlying implementation or build process, but AI backends have many complexities when it comes to compiling for different hardware and the drivers and frameworks it supports. This, along with tuning the model through config options, will affect performance. That’s why I think it’s important to build it from scratch and learn more about the software; otherwise you might get lost once you run into an issue.