Building your own Coding Copilot using Local AIs in VS Code

Get local chat and inline suggestions from multiple Local AIs to enhance your abilities

AI is an efficiency multiplier for getting work done. If you are unfamiliar with a topic, it can fill you in on the known unknowns and help you discover what you don’t even know you are missing. If you do know the topic, it can extend and enhance your train of thought, offer counterarguments, and predict your future work. At either end of the spectrum it is a catalyst for knowledge workers, especially coders.

In our previous Local AI article I showed how easy it is to run Ollama and get up and running with Large Language Models within minutes. Now we are going to harness those models to enhance our ability to code locally. By running the models ourselves we can ensure that none of our data and code leaves the network. We can use GPT-like chat interfaces with sensitive data such as passwords and internal resource names without leaking implementation details to the rest of the world. No longer do we have to self-censor and hand-scrub data before we send it off to an untrusted backend. We can keep our data out of the hands of OpenAI and other companies that might not have the best intentions or privacy policies, or that might train their AI on our work. We can preserve our privacy.

For this to work we are going to use two different models, so let’s pull them before we get started. Keep in mind that llama3 will be semi-broken in Continue until we apply a bug fix later in this article.

ollama pull llama3

ollama pull starcoder:3b

Using VS Code with Local AI

Within VS Code we are going to install the open source extension Continue. I have been using Continue recently on a small Python project, and it has helped me get up to speed much faster as I’m not a regular Python dev. Please don’t take the Python code seriously, it’s pre-pre-alpha. Also note that Continue works with JetBrains products too, so follow that setup if you don’t want to miss out in your favorite IDE.

As an extension, Continue adds inline suggestions with tab completion and a chat interface with context. It lets you use remote AI, such as ChatGPT or Claude, or, more importantly, local AI that we run ourselves.

Feature Demo

As for using the extension, I used the chat interface to help plan out the structure of the project, to get library suggestions, and to apply the changes it suggests directly into my code. Often the hardest part of a project for me is just starting and getting over decision overload. By letting the AI make some initial decisions I can focus on implementation instead of getting bogged down in starter boilerplate. One of the killer features is that it indexes your entire codebase for context. You can also feed it specific lines of code, ask questions about them, and apply the resulting changes inline. See a small demo below where I ask about a specific class and what it does.

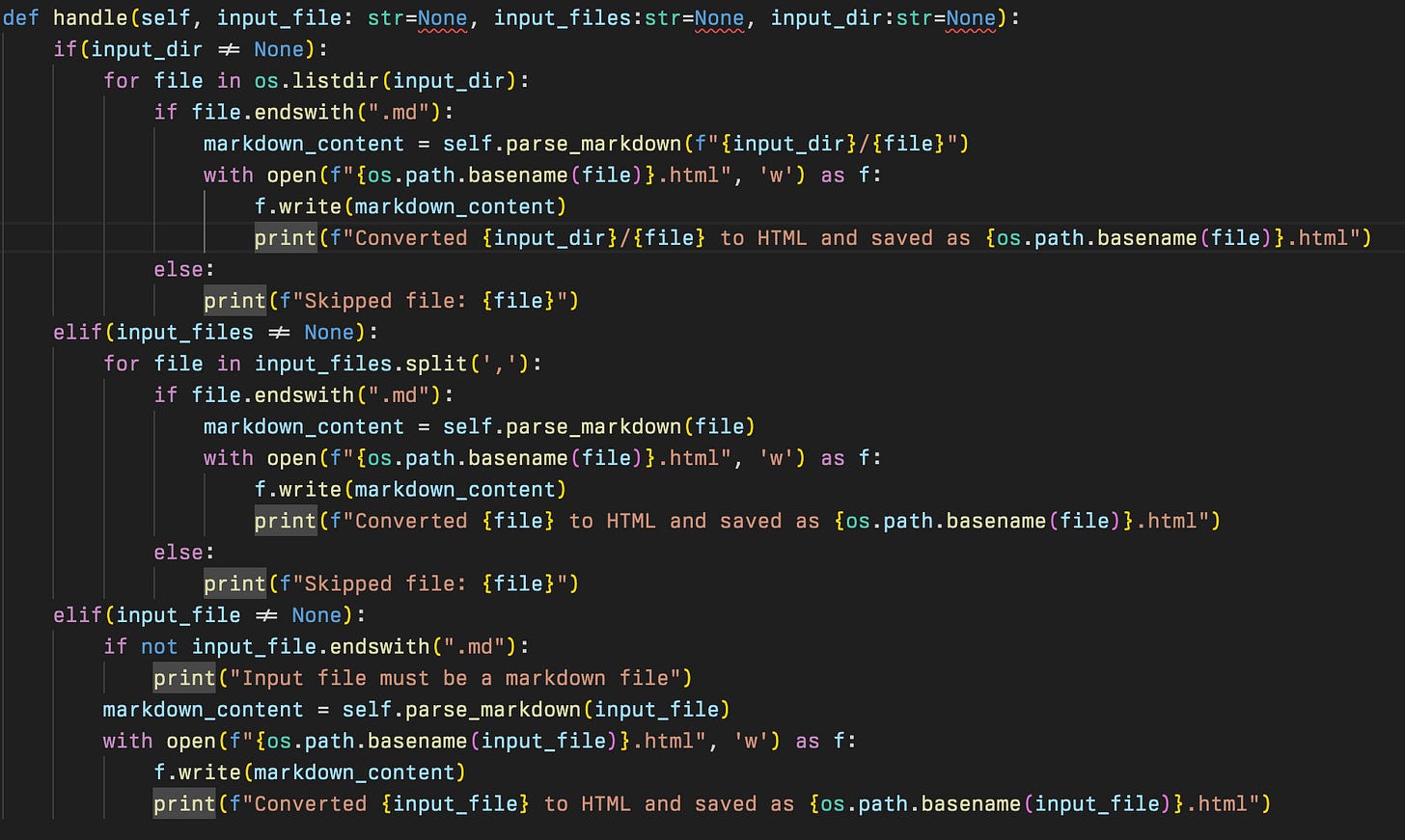

I can also ask it about specific functions, or ask more general questions with the @Codebase tag. If the chat response contains code, the interface lets me copy it, apply it, or ignore it. When applied, the change appears as a diff where I can accept or reject the suggestion. Below you can see me asking the AI to generate some code. It’s not perfect, of course, as I had to make some indentation adjustments, but overall that was much quicker than writing the whole thing out myself. It’s a pretty contrived example for demonstration purposes, but at other times I have found this useful as a natural part of my workflow without even thinking about it.

Keep in mind that all of this was generated on my NVIDIA 3090 graphics card running an 8-billion-parameter model from Facebook. There are many better models that can help you, but this setup keeps us private and secure from prying eyes, and we can always run stronger models.

Additionally, the inline code completion from Starcoder2 is also powerful. I had to write a three-branch if/else statement processing command line input: a single file path, multiple file paths, or a directory of files. The inline suggestions from starcoder2:7b kept nailing every suggestion as I updated the next line to follow the flow of the program. Of course this code could be refactored to have less duplication, but progressive iteration is how most great software was made, one step at a time.
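
For readers who want a concrete picture of that kind of branching, here is a minimal sketch in Python. It is not the code from my project; the function name `collect_files` and the choice to raise exceptions on missing files are assumptions made purely for illustration.

```python
from pathlib import Path


def collect_files(args):
    """Turn command line arguments into a list of files to process.

    Hypothetical helper: handles a directory, a single file path,
    or several file paths, mirroring the three branches described above.
    """
    if not args:
        raise ValueError("expected a file, several files, or a directory")

    paths = [Path(a) for a in args]

    if len(paths) == 1 and paths[0].is_dir():
        # Branch 1: a directory, so take every regular file directly inside it.
        return sorted(p for p in paths[0].iterdir() if p.is_file())
    elif len(paths) == 1:
        # Branch 2: a single file path.
        if not paths[0].is_file():
            raise FileNotFoundError(str(paths[0]))
        return paths
    else:
        # Branch 3: multiple file paths, all of which must exist.
        missing = [str(p) for p in paths if not p.is_file()]
        if missing:
            raise FileNotFoundError(", ".join(missing))
        return paths
```

In a script you would call it as `collect_files(sys.argv[1:])` after importing `sys`. The second and third branches overlap on purpose, which is the sort of duplication a later refactor would remove.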

So let’s actually walk through how to configure this locally for you.

Configuration

After you have Continue installed in VS Code, it will ask you to set up an AI backend. If you wish to go with an online provider such as ChatGPT or Claude, follow the instructions for that and you are done. If you are hosting Ollama on the same computer as VS Code, follow the flow for Ollama and apply the llama3 fix later. I, however, am going to use Ollama on a server on my local network, which requires a little more work. I will cover serving Ollama on the network further down, but for now just follow the Ollama flow as well. Open the extension in the right side panel where Continue suggested you put it. Then click on the settings icon in the bottom right to open up the JSON config file. We can now configure any additional AIs that we need.
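
Before pointing Continue at an Ollama backend, it can help to confirm that the server answers and that both models are actually there. The sketch below is one way to do that in Python. It assumes Ollama is listening on its default port, 11434, and uses the `/api/tags` endpoint of Ollama's REST API, which lists locally available models. The helper names (`normalize`, `missing_models`, `installed_models`) are my own and not part of Ollama or Continue.

```python
import json
from urllib.request import urlopen

# Assumption: Ollama runs on this machine on its default port.
OLLAMA_URL = "http://localhost:11434"


def normalize(tag):
    """Ollama lists a model pulled without a tag as '<name>:latest'."""
    return tag if ":" in tag else f"{tag}:latest"


def missing_models(installed, required):
    """Return the required models that are not in the installed list."""
    have = {normalize(name) for name in installed}
    return [name for name in required if normalize(name) not in have]


def installed_models(base_url=OLLAMA_URL):
    """Ask the Ollama server which models it has available locally."""
    with urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        payload = json.load(resp)
    return [model["name"] for model in payload.get("models", [])]
```

Calling `missing_models(installed_models(), ["llama3", "starcoder:3b"])` should return an empty list once both pulls have finished. For an Ollama server elsewhere on the network, pass its address as `base_url`, for example `installed_models("http://192.168.1.50:11434")`, where the IP address is a made-up placeholder.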

We are going to add our own Ollama-based AIs here. Since we are using llama3, we need a specific entry, as we have to fix a bug in it to get it fully working. In config.json, go to the “models” element and add a new entry like the one below. If you wish to keep other AIs, just append this new item to the list.